Exploratory Data Analysis and Machine Learning with medical data

Recent advances in data science enable scientists to sort, manage and analyze large amounts of data more effectively and efficiently. One sector where these technologies have been applied is healthcare, which is becoming increasingly data-reliant due to the vast quantities of patient and medical data it produces. As a result, these techniques can help improve medical practice and patient care.

- In this notebook, we apply various Exploratory Data Analysis (EDA) techniques to medical data from different datasets and train several machine learning models to predict important medical outcomes.

Exploratory Data Analysis

1st Dataset

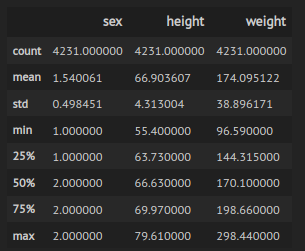

The first dataset consists of several thousand height and weight measurements of men and women. Basic statistics of the imported dataset are shown below:

Figure 1: Basic statistics of imported dataset.
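Statistics like those in Figure 1 are typically produced with pandas. The sketch below is minimal and assumes the data are loaded into a DataFrame with hypothetical columns `sex`, `height` and `weight`. The toy values are made up and only stand in for the real file.

```python
import pandas as pd

# Hypothetical toy stand-in for the height/weight dataset: the column names
# and values are assumptions for illustration, not the real data.
df = pd.DataFrame({
    "sex": [0, 0, 0, 1, 1, 1],
    "height": [160.0, 165.0, 158.0, 178.0, 182.0, 175.0],
    "weight": [55.0, 62.0, 50.0, 80.0, 85.0, 77.0],
})

n_rows = len(df)         # number of measurements (rows)
summary = df.describe()  # count, mean, std, min, quartiles and max per column

print(n_rows)
print(summary)
```

On the real data, `len(df)` is the number that gives the total measurement count mentioned next.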

We observe that the dataset contains 4231 measurements. We can also look at the values for each sex separately:

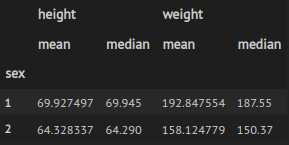

Figure 2: Mean and median height and weight per sex.

Since both the mean and the median height and weight are larger for sex=1, we can infer that sex=1 corresponds to men.
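The per-sex comparison behind this inference can be sketched with a pandas `groupby`. The sketch again assumes hypothetical `sex`, `height` and `weight` columns with made-up toy values. It picks the sex code with the larger mean height as the male group, which mirrors the reasoning above.

```python
import pandas as pd

# Same hypothetical toy data as a stand-in (column names and values are assumptions)
df = pd.DataFrame({
    "sex": [0, 0, 0, 1, 1, 1],
    "height": [160.0, 165.0, 158.0, 178.0, 182.0, 175.0],
    "weight": [55.0, 62.0, 50.0, 80.0, 85.0, 77.0],
})

# Mean and median height and weight for each sex code
per_sex = df.groupby("sex")[["height", "weight"]].agg(["mean", "median"])
print(per_sex)

# The code with the larger mean height is taken to be the male group
male_code = per_sex[("height", "mean")].idxmax()
print("sex code for men:", male_code)
```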

Data visualisation

A scatter plot is usually informative and quick to produce.

Figure 3: Scatter plot that indicates the sex of each person in each point.

Again, women’s height and weight values are mostly lower than men’s.
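A scatter plot coloured by sex can be sketched with matplotlib. The sketch assumes the same hypothetical toy DataFrame and the encoding sex=1 for men (as inferred above) and sex=0 for women. The 0 code is an assumption. It uses the non-interactive `Agg` backend and renders to memory, so it also runs without a display.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the sketch also runs headless
import matplotlib.pyplot as plt
import pandas as pd

# Hypothetical toy data (column names, values and sex codes are assumptions)
df = pd.DataFrame({
    "sex": [0, 0, 0, 1, 1, 1],
    "height": [160.0, 165.0, 158.0, 178.0, 182.0, 175.0],
    "weight": [55.0, 62.0, 50.0, 80.0, 85.0, 77.0],
})
groups = {0: "women", 1: "men"}  # assumed encoding

fig, ax = plt.subplots()
for code, label in groups.items():
    subset = df[df["sex"] == code]
    # One scatter call per group gives each sex its own colour and legend entry
    ax.scatter(subset["height"], subset["weight"], label=label, alpha=0.6)
ax.set_xlabel("height")
ax.set_ylabel("weight")
ax.legend()

buf = io.BytesIO()
fig.savefig(buf, format="png")  # write the figure to memory instead of a window
```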

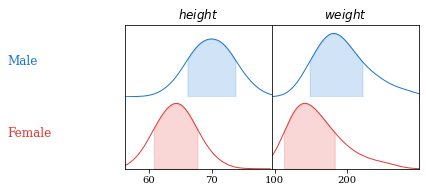

We can also plot the data per sex, taking into account the mean and standard deviation of height and weight.

Figure 4: Scatter plot that indicates the sex of each person in each point.

Figure 5: Scatter plot that indicates the sex of each person in each point.

As the plots above show, men’s mean height and weight are greater than women’s.
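The exact plotting code behind Figures 4 and 5 is not shown. As a minimal sketch, a mean ± standard deviation view per sex can be built from a `groupby` aggregation and matplotlib's `errorbar`. This sketch assumes the same hypothetical toy DataFrame. It uses pandas' default sample standard deviation.

```python
import io

import matplotlib
matplotlib.use("Agg")  # non-interactive backend
import matplotlib.pyplot as plt
import pandas as pd

# Hypothetical toy data (column names and values are assumptions)
df = pd.DataFrame({
    "sex": [0, 0, 0, 1, 1, 1],
    "height": [160.0, 165.0, 158.0, 178.0, 182.0, 175.0],
    "weight": [55.0, 62.0, 50.0, 80.0, 85.0, 77.0],
})

# Mean and sample standard deviation of height and weight per sex code
stats = df.groupby("sex")[["height", "weight"]].agg(["mean", "std"])

fig, ax = plt.subplots()
for code in stats.index:
    # Point at (mean height, mean weight); bars span +/- one standard deviation
    ax.errorbar(
        stats.loc[code, ("height", "mean")],
        stats.loc[code, ("weight", "mean")],
        xerr=stats.loc[code, ("height", "std")],
        yerr=stats.loc[code, ("weight", "std")],
        fmt="o", capsize=4, label=f"sex={code}",
    )
ax.set_xlabel("height")
ax.set_ylabel("weight")
ax.legend()
fig.savefig(io.BytesIO(), format="png")
print(stats)
```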

2nd Dataset

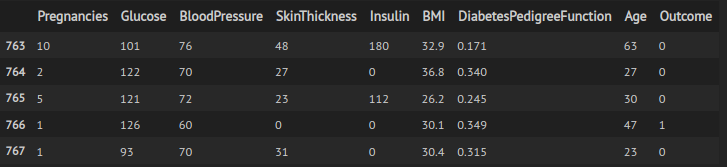

The second dataset of this analysis contains diagnostic measurements from a diabetes dataset that originates from the National Institute of Diabetes and Digestive and Kidney Diseases. This dataset was obtained from Kaggle. All patients in it are females of Pima Indian heritage who are at least 21 years old. The dataset consists of several medical predictor variables and one target variable, Outcome. The predictor variables include the number of pregnancies the patient has had, their BMI, insulin level, age, and so on.

As a first step, let’s explore the imported dataset.

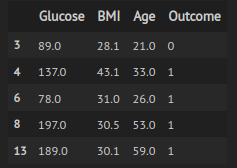

Figure 6: Part of the second dataset.

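We observe that some rows contain zero values in measurements that cannot physiologically be zero (such as glucose, blood pressure or BMI). These zeros indicate missing values, so we omit these rows. After that, we can get a better summary of the imported data.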

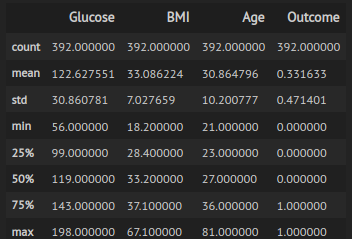

Figure 7: Summary of the second (Diabetes) dataset

After this cleaning step, 392 rows remain.
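The source does not state exactly which columns were filtered. One common way to drop such rows is to treat zeros as missing values only in columns where zero is implausible, and then call `dropna`. This sketch assumes Kaggle-style column names (`Glucose`, `BloodPressure`, `SkinThickness`, `Insulin`, `BMI`) and uses made-up toy rows. `Pregnancies` and `Outcome` are left untouched, because 0 is a legitimate value for them.

```python
import numpy as np
import pandas as pd

# Hypothetical toy rows with assumed column names; values are made up
df = pd.DataFrame({
    "Pregnancies": [2, 1, 0, 3],
    "Glucose": [120, 95, 140, 0],
    "BloodPressure": [70, 66, 80, 72],
    "SkinThickness": [30, 25, 35, 0],
    "Insulin": [0, 90, 150, 0],
    "BMI": [31.5, 26.0, 40.2, 29.0],
    "Age": [45, 23, 33, 38],
    "Outcome": [1, 0, 1, 0],
})

# Columns where a zero can only mean "not measured" (assumed list)
cols_with_missing = ["Glucose", "BloodPressure", "SkinThickness", "Insulin", "BMI"]

clean = df.copy()
clean[cols_with_missing] = clean[cols_with_missing].replace(0, np.nan)
clean = clean.dropna()  # keep only rows with all measurements present
print(len(clean))
```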

Data visualisation

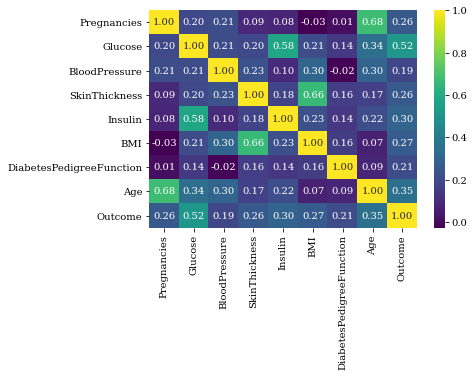

We can explore the correlation of the imported data through a heatmap.

Figure 8: Correlation heatmap of the second (Diabetes) dataset

In a correlation heatmap, the closer a value is to 1 or -1, the stronger the positive or negative correlation between the two variables. For instance, Age and Pregnancies are fairly strongly correlated, with a correlation value of 0.68.
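This assumes that the heatmap shows Pearson correlation coefficients, which is the default of pandas `DataFrame.corr`. Each cell is then the covariance of two variables divided by the product of their standard deviations. The sketch computes this by hand and checks it against pandas on made-up toy values for `Age` and `Pregnancies`.

```python
import numpy as np
import pandas as pd


def pearson_r(x, y):
    """Pearson correlation: sum of centred products over the root of both sums of squares."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    dx = x - x.mean()
    dy = y - y.mean()
    return float((dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum()))


# Hypothetical toy values, not taken from the real dataset
df = pd.DataFrame({"Age": [21, 25, 30, 40, 50], "Pregnancies": [0, 1, 2, 4, 6]})

r_manual = pearson_r(df["Age"], df["Pregnancies"])
corr = df.corr()  # the matrix a correlation heatmap would display
print(round(r_manual, 3), round(corr.loc["Age", "Pregnancies"], 3))
```

On a full DataFrame, the `corr` matrix can be passed to an image or heatmap plotting function to obtain a figure like Figure 8.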

Training a machine learning model

Next, let’s keep only three variables that are strongly correlated with the outcome of the dataset (diabetes / no diabetes).

Figure 9: Fraction of dataset used for ML training
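The text does not say which three variables were kept or exactly how they were chosen. One common approach is to rank predictors by the absolute value of their correlation with the target. The sketch assumes Kaggle-style column names and uses made-up toy values. Taking the absolute value means that strong negative correlations also count.

```python
import pandas as pd

# Hypothetical toy rows with assumed column names; values are made up
df = pd.DataFrame({
    "Glucose": [85, 90, 100, 150, 160, 170],
    "BMI": [25.0, 30.0, 28.0, 33.0, 31.0, 35.0],
    "Age": [22, 40, 25, 35, 30, 45],
    "BloodPressure": [70, 72, 68, 71, 69, 70],
    "Outcome": [0, 0, 0, 1, 1, 1],
})

# Correlation of every predictor with the target, excluding the target itself
corr_with_outcome = df.corr()["Outcome"].drop("Outcome")

# Rank by absolute correlation and keep the three strongest predictors
top3 = corr_with_outcome.abs().nlargest(3).index.tolist()
narrowed = df[top3 + ["Outcome"]]
print(corr_with_outcome.round(3))
print(top3)
```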

We can explore the basic statistics of this dataset.

Figure 10: Statistics of dataset used for ML training

Creating a machine learning model to predict diabetes cases in unseen data

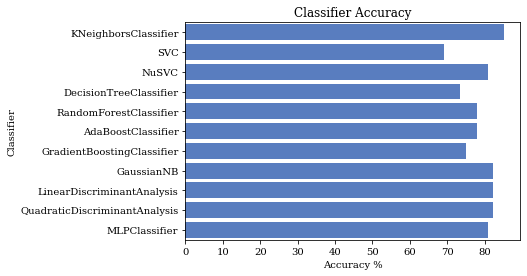

Using the narrowed dataset above, we trained several models based on the following classification algorithms:

- K-Nearest Neighbours

- Support Vector Classifier

- Nu Support Vector Classifier

- Decision Trees

- Random Forest

- AdaBoost

- Gradient Boosting

- Gaussian Naive Bayes

- Linear Discriminant Analysis

- Quadratic Discriminant Analysis

- Multilayer Perceptron

Figure 11: Accuracies of the trained models
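The source does not show the training code. A comparison like Figure 11 can be sketched with scikit-learn by fitting each classifier in a loop and recording accuracy, log loss and fitting time. This sketch makes several assumptions:

- Synthetic data from `make_classification` (392 rows, 3 predictors) stands in for the real narrowed dataset.
- The split is 80/20 and stratified.
- Every model uses default hyperparameters and is preceded by `StandardScaler`.

The resulting numbers will not reproduce Figure 11.

```python
import time

import pandas as pd
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import (LinearDiscriminantAnalysis,
                                           QuadraticDiscriminantAnalysis)
from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                              RandomForestClassifier)
from sklearn.metrics import accuracy_score, log_loss
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC, NuSVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the narrowed dataset: 392 rows, 3 predictors, binary outcome
X, y = make_classification(n_samples=392, n_features=3, n_informative=3,
                           n_redundant=0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

classifiers = {
    "K-Nearest Neighbours": KNeighborsClassifier(),
    "Support Vector Classifier": SVC(probability=True, random_state=0),
    "Nu Support Vector Classifier": NuSVC(probability=True, random_state=0),
    "Decision Tree": DecisionTreeClassifier(random_state=0),
    "Random Forest": RandomForestClassifier(random_state=0),
    "AdaBoost": AdaBoostClassifier(random_state=0),
    "Gradient Boosting": GradientBoostingClassifier(random_state=0),
    "Gaussian Naive Bayes": GaussianNB(),
    "Linear Discriminant Analysis": LinearDiscriminantAnalysis(),
    "Quadratic Discriminant Analysis": QuadraticDiscriminantAnalysis(),
    "Multilayer Perceptron": MLPClassifier(max_iter=2000, random_state=0),
}

rows = []
for name, clf in classifiers.items():
    # Standardise features first; distance- and gradient-based models benefit from it
    model = make_pipeline(StandardScaler(), clf)
    start = time.perf_counter()
    model.fit(X_train, y_train)
    fit_time = time.perf_counter() - start  # includes fitting the scaler
    rows.append({
        "model": name,
        "accuracy": accuracy_score(y_test, model.predict(X_test)),
        "log_loss": log_loss(y_test, model.predict_proba(X_test)),
        "fit_time_s": fit_time,
    })

results = pd.DataFrame(rows).sort_values("accuracy", ascending=False)
print(results.to_string(index=False))
best = results.iloc[0]
print("best model:", best["model"])
```

Collecting all three metrics in one table makes it easy to see the trade-offs. For example, the most accurate model is not necessarily the one with the lowest log loss.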

According to the computed accuracies, the best model was the K-Nearest Neighbours classifier.

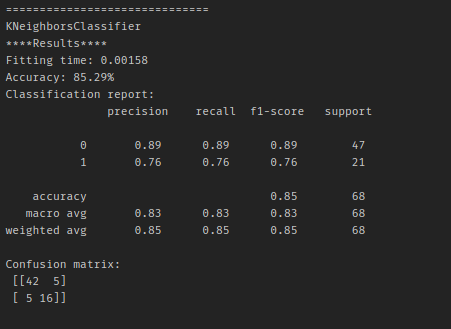

Figure 12: Details of the model trained with the K-Nearest Neighbours algorithm

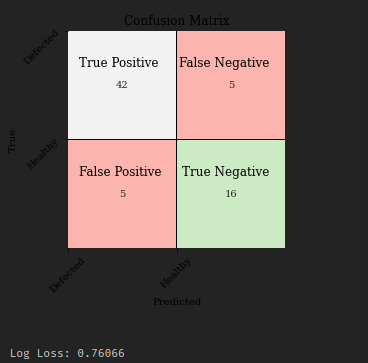

Figure 13: Confusion matrix of the model trained with the K-Nearest Neighbours algorithm
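A classification matrix of this kind is usually a confusion matrix. It counts true negatives, false positives, false negatives and true positives. The sketch below uses scikit-learn's `confusion_matrix` on made-up labels (1 = diabetes, 0 = no diabetes). It also derives accuracy, sensitivity and specificity from the four counts. In scikit-learn's convention, rows are true classes and columns are predicted classes.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical true and predicted labels (1 = diabetes, 0 = no diabetes)
y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0]

# Rows are true classes, columns are predicted classes
cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
tn, fp, fn, tp = cm.ravel()

accuracy = (tp + tn) / cm.sum()
sensitivity = tp / (tp + fn)  # share of diabetes cases that are detected
specificity = tn / (tn + fp)  # share of non-diabetes cases correctly ruled out
print(cm)
print(accuracy, sensitivity, specificity)
```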

Based on accuracy, the best-performing model was the K-Nearest Neighbours classifier, with an accuracy of 85.29% and a short fitting time of 0.00158 s. However, this model also showed a relatively high log loss of 0.76.
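High accuracy and high log loss can coexist because log loss also penalises *confident* mistakes. The source gives no explanation, but one plausible reason concerns the k-nearest neighbours classifier. It estimates probabilities as the fraction of neighbours in each class, so when all neighbours carry the wrong label, the predicted probability of the true class is 0. The sketch implements binary log loss by hand. It compares two hypothetical probability vectors that have the same accuracy; the 5-neighbour setting and all values are illustrative assumptions.

```python
import math


def binary_log_loss(y_true, p_pos, eps=1e-15):
    """Mean negative log-likelihood of binary labels; p_pos[i] is P(class 1)."""
    total = 0.0
    for y, p in zip(y_true, p_pos):
        p = min(max(p, eps), 1 - eps)  # clip so that log(0) is never evaluated
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


def accuracy(y_true, p_pos, threshold=0.5):
    """Share of samples whose thresholded prediction matches the label."""
    hits = sum(int(p >= threshold) == y for y, p in zip(y_true, p_pos))
    return hits / len(y_true)


y_true = [1, 0, 1, 0]
# Hypothetical 5-NN output: the fraction of the 5 neighbours labelled 1.
# For the last sample, all 5 neighbours carry the wrong label.
p_knn = [1.0, 0.0, 0.8, 1.0]
# Hypothetical model that makes the same mistake but is less certain about it
p_soft = [0.9, 0.1, 0.8, 0.6]

print(accuracy(y_true, p_knn), binary_log_loss(y_true, p_knn))
print(accuracy(y_true, p_soft), binary_log_loss(y_true, p_soft))
```

Both vectors classify three of the four samples correctly. However, the single fully confident error dominates the first model's log loss.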

Best model

| Best model | Accuracy (%) | Log loss | Fitting time (s) |
|---|---|---|---|
| K-Nearest Neighbours | 85.29 | 0.76 | 0.00158 |

A more detailed description of this project’s Python implementation can be found in this GitHub repository: Link to Github repository